Author: Eli5DeFi

Compiled by: Tim, PANews

PANews Editor's Note: On November 25th, Google's total market capitalization reached a record high of $3.96 trillion. Factors behind the surge in its stock price included the newly released Gemini 3, its most powerful AI model, and its self-developed TPU chip. Beyond AI, the TPU will also play a significant role in blockchain technology.

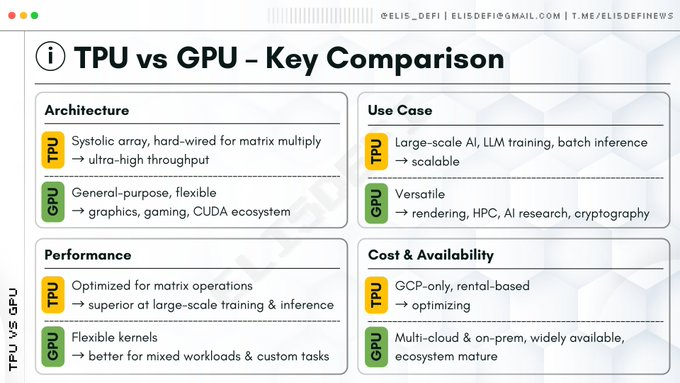

The hardware narrative of modern computing has been largely defined by the rise of the GPU.

From gaming to deep learning, NVIDIA's parallel architecture has become the industry-recognized standard, gradually pushing CPUs into a coordinating role.

However, as AI models encounter scaling bottlenecks and blockchain technology moves towards complex cryptographic applications, a new competitor, the Tensor Processing Unit (TPU), has emerged.

Although TPU is often discussed within the framework of Google's AI strategy, its architecture unexpectedly aligns with the core needs of post-quantum cryptography, the next milestone in blockchain technology.

This article explains, by reviewing the evolution of hardware and comparing architectural features, why TPUs (rather than GPUs) are better suited to handle the intensive mathematical operations required by post-quantum cryptography when building decentralized networks resistant to quantum attacks.

Hardware Evolution: From Serial Processing to Systolic Arrays

To understand the importance of TPU, you need to first understand the problems it solves.

- Central Processing Unit (CPU): As an all-rounder, it excels at serial processing and logical branching operations, but its role is limited when it is necessary to perform massive mathematical operations simultaneously.

- Graphics Processing Unit (GPU): As an expert in parallel processing, it was originally designed to render pixels, and therefore excels at executing a large number of identical tasks simultaneously (SIMD: Single Instruction Multiple Data). This characteristic made it a mainstay of the early explosion of artificial intelligence.

- Tensor Processing Unit (TPU): A specialized chip designed by Google specifically for neural network computing tasks.

Advantages of the Systolic Architecture

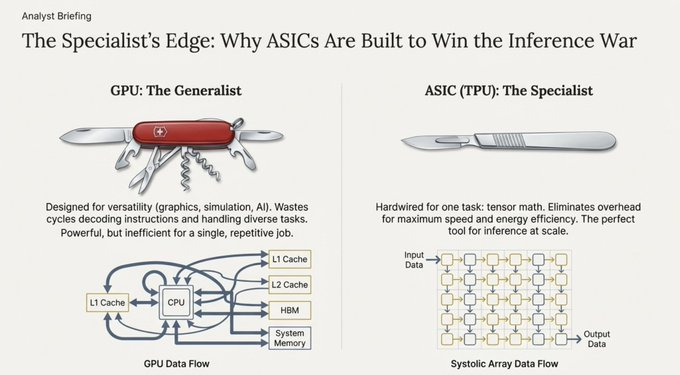

The fundamental difference between GPUs and TPUs lies in their data processing methods.

GPUs must repeatedly access memory (registers, cache) during computation, while TPUs employ a systolic array architecture. Like a heart pumping blood, this design moves data through a large grid of compute cells in a regular, rhythmic pulse.

https://www.ainewshub.org/post/ai-inference-costs-tpu-vs-gpu-2025

The calculation results are directly passed to the next computation unit without needing to be written back to memory. This design greatly alleviates the von Neumann bottleneck, which is the latency caused by the repeated movement of data between memory and the processor, thereby achieving an order-of-magnitude increase in throughput for specific mathematical operations.
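The data flow described above can be sketched in a few lines of Python. This is an illustrative simulation of an output-stationary systolic array (one common variant), not a description of Google's actual hardware; the function name `systolic_matmul` and the tick-by-tick schedule are assumptions made for the example.

```python
def systolic_matmul(A, B):
    """Simulate an output-stationary systolic array computing C = A x B.

    A is n x k and B is k x m (lists of rows). Cell (i, j) owns one
    accumulator. On each clock tick it multiplies the value arriving from
    its left neighbour by the value arriving from above, adds the product
    to its accumulator, and forwards both values right and down.
    """
    n, k, m = len(A), len(B), len(B[0])
    acc = [[0] * m for _ in range(n)]    # one accumulator per cell
    a_reg = [[0] * m for _ in range(n)]  # values travelling left to right
    b_reg = [[0] * m for _ in range(n)]  # values travelling top to bottom

    # The last useful product reaches the bottom-right cell at tick n+m+k-3.
    for t in range(n + m + k - 2):
        # Visit cells in reverse order so each one still sees the value its
        # neighbour held on the previous tick.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j == 0:
                    s = t - i  # row i of A enters the grid skewed by i ticks
                    a_in = A[i][s] if 0 <= s < k else 0
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:
                    s = t - j  # column j of B enters skewed by j ticks
                    b_in = B[s][j] if 0 <= s < k else 0
                else:
                    b_in = b_reg[i - 1][j]
                acc[i][j] += a_in * b_in
                a_reg[i][j] = a_in
                b_reg[i][j] = b_in
    return acc


if __name__ == "__main__":
    print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Note that the inner loop never reads `A` or `B` except at the left and top edges of the grid: every other cell works only on values handed over by a neighbour, which is the property that reduces memory traffic.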

The key to post-quantum cryptography: Why does blockchain need TPU?

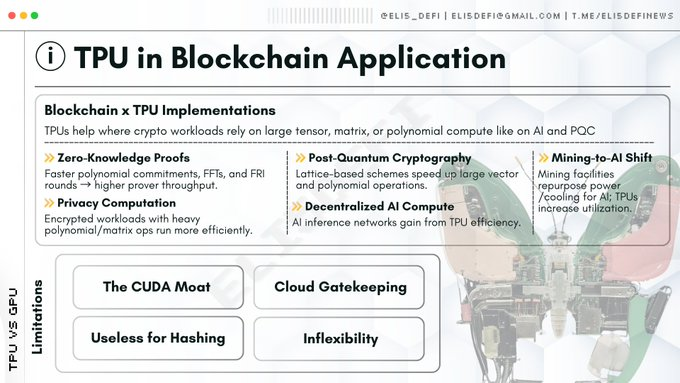

The most critical application of TPU in the blockchain field is not mining, but cryptographic security.

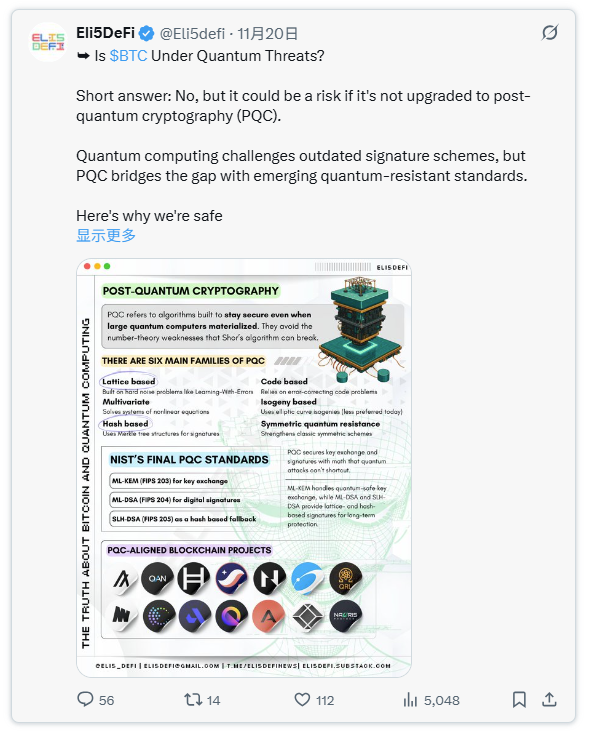

Current blockchain systems rely on elliptic curve cryptography or RSA, both of which are fatally vulnerable to Shor's algorithm. This means that once a sufficiently powerful quantum computer becomes available, an attacker could derive the private key from the public key, potentially wiping out all crypto assets on Bitcoin or Ethereum.

The solution lies in post-quantum cryptography (PQC). Currently, the leading PQC standard algorithms (such as Kyber and Dilithium, standardized by NIST as ML-KEM and ML-DSA) are based on lattice cryptography.

Mathematical fit of TPU

This is precisely the advantage of TPUs over GPUs. Lattice cryptography heavily relies on intensive operations on large matrices and vectors, primarily including:

- Matrix-vector multiplication: As + e (where A is a matrix, and s and e are vectors).

- Polynomial operations: algebraic operations over polynomial rings, usually implemented using the number-theoretic transform (NTT).

Traditional GPUs treat these computations as general-purpose parallel tasks, while TPUs accelerate them with fixed-function matrix units built into the hardware. The mathematical structure of lattice cryptography maps almost directly onto the physical layout of the TPU's systolic array.
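To make the first operation concrete, here is a minimal sketch of a toy sample b = As + e (mod q). The modulus 3329 is the one Kyber uses; the dimensions, the noise range, and the function name `lwe_sample` are assumptions chosen for illustration, and real schemes use structured polynomial matrices rather than this plain form.

```python
import random

Q = 3329  # Kyber's modulus; everything else here is toy-sized


def lwe_sample(n_rows, n_cols, q=Q, seed=0):
    """Return (A, s, e, b) with b = A*s + e mod q.

    A is a uniformly random public matrix, s is a small secret vector and
    e is a small error vector. NOT secure: Python's `random` module is not
    a cryptographic generator and the parameters are far too small.
    """
    rng = random.Random(seed)
    A = [[rng.randrange(q) for _ in range(n_cols)] for _ in range(n_rows)]
    s = [rng.randint(-2, 2) for _ in range(n_cols)]
    e = [rng.randint(-2, 2) for _ in range(n_rows)]
    # One dot product per row of A: exactly the multiply-accumulate pattern
    # that a matrix unit is built for.
    b = [
        (sum(a * x for a, x in zip(row, s)) + err) % q
        for row, err in zip(A, e)
    ]
    return A, s, e, b


if __name__ == "__main__":
    A, s, e, b = lwe_sample(4, 3)
    print("b =", b)
```

Recovering `s` from `A` and `b` alone is the hard problem that lattice schemes build on, while computing `b` is just a dense matrix-vector product followed by a modular reduction.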

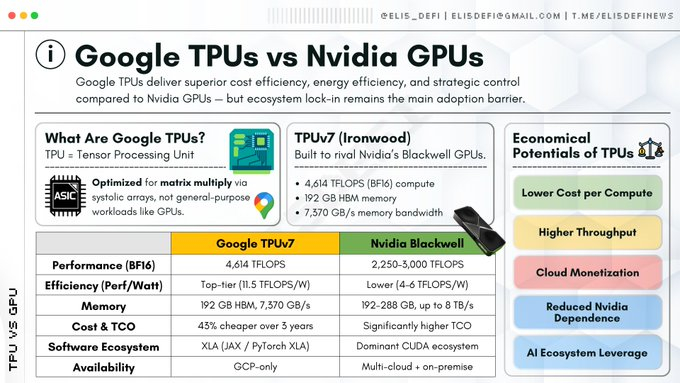

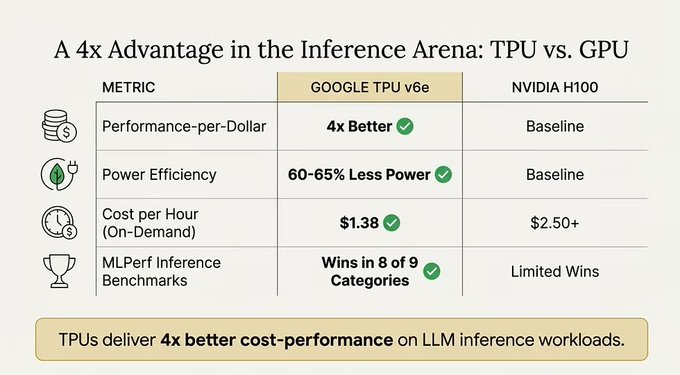

The technical battle between TPU and GPU

While GPUs remain the industry's all-purpose workhorse, TPUs have a clear advantage when handling specific math-intensive tasks.

Conclusion: GPUs excel in versatility and ecosystem, while TPUs have an advantage in intensive linear algebra computation efficiency, which is the core mathematical operation upon which AI and modern advanced cryptography rely.

TPU Extends the Narrative: Zero-Knowledge Proofs and Decentralized AI

Besides post-quantum cryptography, TPUs have also shown application potential in two other key areas of Web3.

Zero-knowledge proof

ZK-Rollups (such as Starknet or zkSync), as scaling solutions for Ethereum, require massive computations in their proof generation process, mainly including:

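- Fast Fourier Transform (FFT): Converts polynomials quickly between their coefficient and evaluation representations.

- Multi-scalar multiplication (MSM): Computes sums of many scalar-point products on elliptic curves.

- FRI protocol: The Fast Reed-Solomon Interactive Oracle Proof of Proximity, used to verify that committed data is close to a low-degree polynomial.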

These operations are not the hash calculations that mining ASICs excel at, but polynomial mathematics. Compared to general-purpose CPUs, TPUs can significantly accelerate FFT and polynomial commitment operations, and because these algorithms have predictable data flow, TPUs can typically accelerate them more efficiently than GPUs.
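The FFT used in these proof systems runs over a finite field, where it is usually called the number-theoretic transform (NTT). The sketch below multiplies two polynomials modulo x^8 - 1 with a recursive NTT. The toy parameters (q = 17, n = 8, root of unity 2) and all function names are assumptions picked so the arithmetic can be checked by hand; production provers use much larger fields, and lattice schemes typically work modulo x^n + 1 instead.

```python
Q = 17      # toy prime modulus
N = 8       # transform length (a power of two dividing Q - 1)
OMEGA = 2   # primitive 8th root of unity mod 17: 2**4 = 16 = -1, 2**8 = 1


def ntt(a, omega, q):
    """Recursive Cooley-Tukey transform of `a` over the integers mod q."""
    n = len(a)
    if n == 1:
        return a[:]
    omega_sq = omega * omega % q
    even = ntt(a[0::2], omega_sq, q)
    odd = ntt(a[1::2], omega_sq, q)
    out = [0] * n
    w = 1
    for i in range(n // 2):
        t = w * odd[i] % q
        out[i] = (even[i] + t) % q
        out[i + n // 2] = (even[i] - t) % q  # uses omega**(n/2) = -1
        w = w * omega % q
    return out


def intt(a, omega, q):
    """Inverse transform: run the NTT with omega^-1, then divide by n."""
    inv_n = pow(len(a), -1, q)  # modular inverse, Python 3.8+
    return [x * inv_n % q for x in ntt(a, pow(omega, -1, q), q)]


def poly_mul_ntt(f, g, omega=OMEGA, q=Q):
    """Multiply f and g modulo (x^n - 1, q) via pointwise products."""
    F, G = ntt(f, omega, q), ntt(g, omega, q)
    return intt([x * y % q for x, y in zip(F, G)], omega, q)


def poly_mul_schoolbook(f, g, q=Q):
    """Reference O(n^2) cyclic convolution, used to check the NTT result."""
    n = len(f)
    out = [0] * n
    for i, fi in enumerate(f):
        for j, gj in enumerate(g):
            out[(i + j) % n] = (out[(i + j) % n] + fi * gj) % q
    return out


if __name__ == "__main__":
    f = [1, 2, 3, 0, 0, 0, 0, 0]  # 1 + 2x + 3x^2
    g = [4, 5, 0, 0, 0, 0, 0, 0]  # 4 + 5x
    print(poly_mul_ntt(f, g) == poly_mul_schoolbook(f, g))
```

The butterfly pattern inside `ntt` is fixed in advance and does not depend on the data, which is what the text means by predictable data flow.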

Decentralized AI

With the rise of decentralized AI networks such as Bittensor, network nodes need to have the ability to run AI model inference. Running a general-purpose large language model is essentially performing massive matrix multiplication operations.

Compared to GPU clusters, TPUs enable decentralized nodes to process AI inference requests with lower energy consumption, thereby improving the commercial viability of decentralized AI.
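As a rough illustration of why inference reduces to matrix multiplication, the following sketch runs a forward pass through a tiny two-layer network. The layer sizes, weights, and function names are hypothetical; real language models add attention, normalization, and many more layers, but the bulk of the arithmetic has the same shape.

```python
def matmul(X, W):
    """Multiply an (n x k) matrix by a (k x m) matrix, both lists of rows."""
    return [[sum(x * w for x, w in zip(row, col)) for col in zip(*W)]
            for row in X]


def relu(X):
    """Element-wise max(0, v), the only non-matrix step in this sketch."""
    return [[max(0.0, v) for v in row] for row in X]


def forward(x, W1, W2):
    """Two dense layers: x -> relu(x @ W1) -> (...) @ W2."""
    return matmul(relu(matmul(x, W1)), W2)


def count_macs(n, k, m):
    """Multiply-accumulate operations needed for an (n x k) @ (k x m) product."""
    return n * k * m


if __name__ == "__main__":
    x = [[1.0, -2.0]]
    W1 = [[1.0, 0.0], [0.0, 1.0]]
    W2 = [[2.0], [3.0]]
    print(forward(x, W1, W2))
    # A single token passing through one hypothetical 4096 x 4096 layer:
    print(count_macs(1, 4096, 4096))
```

Almost all of the work sits in `matmul`, so hardware that performs multiply-accumulate steps cheaply determines the cost of serving each request.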

TPU Ecosystem

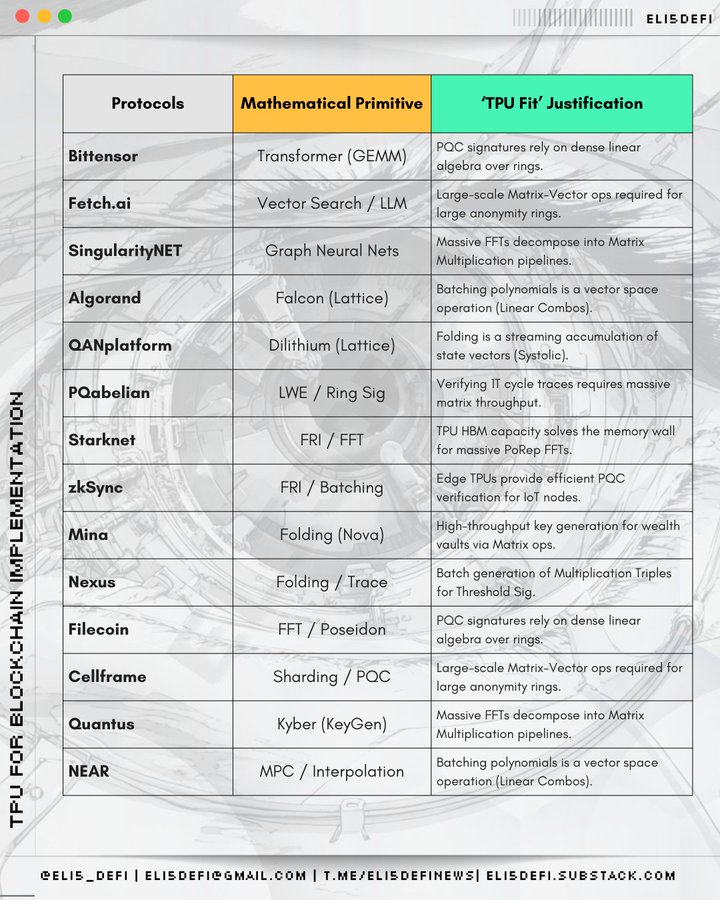

Although most projects still rely on GPUs due to the widespread adoption of CUDA, the following areas are poised for TPU integration, especially within the narrative framework of post-quantum cryptography and zero-knowledge proofs.

Zero-knowledge proofs and scaling solutions

Why choose TPU? Because ZK proof generation requires massively parallel processing of polynomial operations, and under certain architecture configurations, TPUs are far more efficient than general-purpose GPUs at handling such tasks.

- Starknet (Layer 2 scaling solution): STARK proofs rely heavily on the Fast Fourier Transform and the FRI protocol (Fast Reed-Solomon interactive oracle proofs), and these computationally intensive operations are highly compatible with the computational logic of the TPU.

- zkSync (Layer 2 scaling solution): Its Airbender prover needs to handle large-scale FFT and polynomial operations, which is the core bottleneck that TPUs can address.

- Scroll (Layer 2 scaling solution): It adopts the Halo2 and Plonk proof systems, and its core operations, KZG commitment verification and multi-scalar multiplication, map well onto the systolic architecture of the TPU.

- Aleo (Privacy-Preserving Public Chain): Focuses on zk-SNARK zero-knowledge proof generation, and its core operations rely on polynomial mathematical characteristics that are highly compatible with the dedicated computing throughput of TPU.

- Mina (lightweight public blockchain): It adopts recursive SNARKs technology. Its mechanism for continuously regenerating proofs requires repeated execution of polynomial operations. This characteristic highlights the high-efficiency computing value of TPU.

- Zcash (privacy coin): The classic Groth16 proof system relies on polynomial operations. Although it is an early technology, high-throughput hardware still allows it to benefit significantly.

- Filecoin (DePIN, storage): Its proof-of-replication mechanism verifies the validity of stored data through zero-knowledge proofs and polynomial encoding techniques.

Decentralized AI and Agent Computing

Why choose a TPU? This is precisely the native application scenario for TPUs, designed specifically to accelerate neural network machine learning tasks.

- Bittensor (decentralized AI network): Its core architecture is decentralized AI inference, which perfectly matches the tensor computing capabilities of the TPU.

- Fetch (AI Agent): Autonomous AI agents rely on continuous neural network inference to make decisions, and TPUs can run these models with lower latency.

- Singularity (AI Service Platform): As a marketplace for trading artificial intelligence services, Singularity could significantly improve the speed and cost-effectiveness of underlying model execution by integrating TPUs.

- NEAR (Public Chain, AI Strategic Transformation): It is pivoting toward on-chain AI and agents running in trusted execution environments, and the tensor operations this relies on call for TPU acceleration.

Post-quantum cryptography networks

Why choose TPU? The security of post-quantum cryptography often rests on the hardness of problems such as finding the shortest vector in a lattice, and the schemes built on these problems are computed with dense matrix and vector operations. In terms of computational architecture, these tasks are highly similar to AI workloads.

- Algorand (public blockchain): It adopts a quantum-safe hashing and vector operation scheme, which is highly compatible with the parallel mathematical computing capabilities of TPU.

- QAN (Quantum-resistant public chain): Employs lattice cryptography, whose underlying polynomial and vector operations closely mirror the mathematical workloads that TPUs specialize in.

- Nexus (computing platform, zkVM): Its quantum-resistance preparations involve polynomial and lattice-based algorithms that can be efficiently mapped onto the TPU architecture.

- Cellframe (Quantum-resistant public blockchain): The lattice cryptography and hash-based cryptography it uses involve tensor-like operations, making it an ideal candidate for TPU acceleration.

- Abelian (privacy token): Focuses on lattice operations for post-quantum cryptography. Similar to QAN, its technical architecture fully benefits from the high throughput of TPU vector processors.

- Quantus (public blockchain): Post-quantum cryptographic signatures rely on large-scale vector operations, and TPUs have a much higher parallelization capability for handling such operations than standard CPUs.

- Pauli (Computing Platform): Quantum-safe computing involves a large number of matrix operations, which is precisely the core advantage of the TPU architecture.

Development bottleneck: Why has TPU not yet been widely adopted?

If TPUs are so efficient in post-quantum cryptography and zero-knowledge proofs, why is the industry still scrambling to buy H100 chips?

- CUDA Moat: NVIDIA's CUDA software platform has become an industry standard, and the vast majority of cryptography engineers program against CUDA. Porting code to the JAX and XLA stack that TPUs require is not only technically challenging but also demands a significant investment of resources.

- Cloud platform entry barriers: High-end TPUs are available almost exclusively through Google Cloud. Decentralized networks that rely too heavily on a single centralized cloud service provider will face censorship risks and single points of failure.

- Rigid architecture: If cryptographic algorithms require fine-tuning (such as introducing branching logic), TPU performance will drop sharply. GPUs, on the other hand, are far superior to TPUs in handling such irregular logic.

- Limitations of hash operations: TPUs cannot replace Bitcoin mining machines. The SHA-256 algorithm involves bit-level operations rather than matrix operations, rendering TPUs useless in this area.
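The last point is easier to see with the building blocks of SHA-256 written out. The sketch below implements a few of the 32-bit logical functions defined in the SHA-256 specification (FIPS 180-4); it is not a full hash implementation, and the Python function names are chosen for this example.

```python
MASK32 = 0xFFFFFFFF  # SHA-256 works on 32-bit words


def rotr(x, n):
    """Rotate a 32-bit word right by n bits."""
    return ((x >> n) | (x << (32 - n))) & MASK32


def ch(x, y, z):
    """'Choose': each bit of x selects the matching bit of y (1) or z (0)."""
    return ((x & y) ^ (~x & z)) & MASK32


def maj(x, y, z):
    """'Majority': each output bit is the majority vote of the input bits."""
    return (x & y) ^ (x & z) ^ (y & z)


def big_sigma0(x):
    """Mixing function applied to working variable a in each round."""
    return rotr(x, 2) ^ rotr(x, 13) ^ rotr(x, 22)


def big_sigma1(x):
    """Mixing function applied to working variable e in each round."""
    return rotr(x, 6) ^ rotr(x, 11) ^ rotr(x, 25)


if __name__ == "__main__":
    print(hex(rotr(0x00000001, 1)))
    print(hex(ch(0xFFFFFFFF, 0x12345678, 0x9ABCDEF0)))
```

Everything here is rotation, AND, XOR, and NOT on individual bits. There are no multiplications to accumulate, so a grid of multiply-accumulate cells has nothing to speed up.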

Conclusion: Layered architecture is the future.

The future of Web3 hardware is not a winner-takes-all competition, but rather an evolution towards a layered architecture.

GPUs will continue to play a leading role in general computing, graphics rendering, and tasks requiring complex branching logic.

TPUs (and similar ASIC-based accelerators) will gradually become the standard configuration for the Web3 "mathematics layer," specifically designed to generate zero-knowledge proofs and verify post-quantum cryptographic signatures.

As blockchains migrate to post-quantum security standards, the massive matrix operations required for transaction signing and verification will make the systolic architecture of TPUs not an optional extra, but essential infrastructure for building scalable quantum-safe decentralized networks.