A work of art is never completed, only abandoned.

Everyone is talking about AI Agents, but they are not talking about the same thing. As a result, the AI Agent we care about differs from the one the general public and AI practitioners have in mind.

A long time ago, I wrote that Crypto is an illusion of AI. From then until now, the combination of Crypto and AI has been a one-sided love. AI practitioners rarely mention Web3 or blockchain, while Crypto practitioners are deeply in love with AI. After seeing that even AI Agent frameworks can be tokenized, I wonder whether AI practitioners can truly be brought into our world.

AI is the agent of Crypto. Seen from the Crypto side, this is the best commentary on the current AI craze. Crypto's enthusiasm for AI differs from that of other industries: we particularly hope to integrate the issuance and operation of financial assets with it.

Agent evolution, the origin of technical marketing

At its root, the AI Agent has at least three sources. OpenAI's roadmap to AGI (artificial general intelligence) lists it as an important step, which has made the word a buzzword beyond the technical level. In essence, however, the Agent is not a new concept, and even with AI empowerment added it is hard to call it a revolutionary technological trend.

First, there is the AI Agent in the eyes of OpenAI, which is similar to L3 in the autonomous driving classification. An AI Agent can be regarded as having certain advanced driver-assistance capabilities, but it cannot completely replace humans.

Image caption: OpenAI's planned AGI stages Image source: https://www.bloomberg.com/

Second, as the name implies, an AI Agent is an agent empowered by AI. Agent mechanisms and models are nothing new in computing. Under OpenAI's plan, the Agent becomes the next form of AI after dialogue (ChatGPT) and reasoning (various bots). This L3 stage is characterized by "autonomous behavior", or, as LangChain founder Harrison Chase defines it: "AI Agent is a system that uses LLM to make control flow decisions for programs."
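To make the "LLM makes control flow decisions" definition concrete, here is a minimal sketch. It assumes a hypothetical `fake_llm` function standing in for a real model call, plus two illustrative handlers; none of these names come from OpenAI or LangChain.

```python
from typing import Callable, Dict


def fake_llm(request: str) -> str:
    """Hypothetical stand-in for an LLM call: returns the name of the next step."""
    if "weather" in request.lower():
        return "call_weather_api"
    return "answer_directly"


def call_weather_api(request: str) -> str:
    # Placeholder for an external API call (assumption: no real service is contacted).
    return "weather: (result from a hypothetical weather API)"


def answer_directly(request: str) -> str:
    # Placeholder for text generated by the model itself.
    return "answer: (text generated by the model)"


# The program defines the possible branches; the model picks one of them.
ROUTES: Dict[str, Callable[[str], str]] = {
    "call_weather_api": call_weather_api,
    "answer_directly": answer_directly,
}


def run_agent(request: str, llm: Callable[[str], str] = fake_llm) -> str:
    decision = llm(request)
    # Fall back to a direct answer if the model names an unknown branch.
    handler = ROUTES.get(decision, answer_directly)
    return handler(request)
```

In a traditional script the `if`/`else` would be written by the programmer; here the branch is chosen by the model's output, which is the whole difference the definition points at.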

This is the key point. Before LLMs emerged, an Agent mainly executed automated processes defined by humans. For example, when programmers wrote crawlers, they would set the User-Agent to imitate the browser version, operating system and other details of a real user. Of course, if an AI Agent is used to imitate human behavior more closely, an AI Agent crawler framework will appear that makes the crawler "more like a human".
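The classic User-Agent trick can be sketched with Python's standard library. The browser string and the `build_request` helper below are illustrative assumptions; no network request is actually sent.

```python
from urllib.request import Request

# Example browser identification string (an assumption, chosen for illustration).
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/120.0.0.0 Safari/537.36"
)


def build_request(url: str, user_agent: str = BROWSER_UA) -> Request:
    """Build an HTTP request that identifies itself as a desktop browser."""
    return Request(url, headers={"User-Agent": user_agent})


req = build_request("https://example.com/")
# urllib normalizes header names, so the stored key is "User-agent".
print(req.get_header("User-agent"))
```

Everything here is fixed in advance by the programmer, which is exactly what distinguishes this older kind of "agent" from one whose steps are decided by a model.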

In such a change, AI Agents have to be combined with existing scenarios; there is almost no completely original field. Even code completion and generation tools such as Cursor and GitHub Copilot build on ideas like LSP (Language Server Protocol). There are many examples of this:

- Apple: AppleScript (Script Editor) -- Alfred -- Siri -- Shortcuts -- Apple Intelligence

- Terminal: Terminal (macOS)/PowerShell (Windows) -- iTerm 2 -- Warp (AI Native)

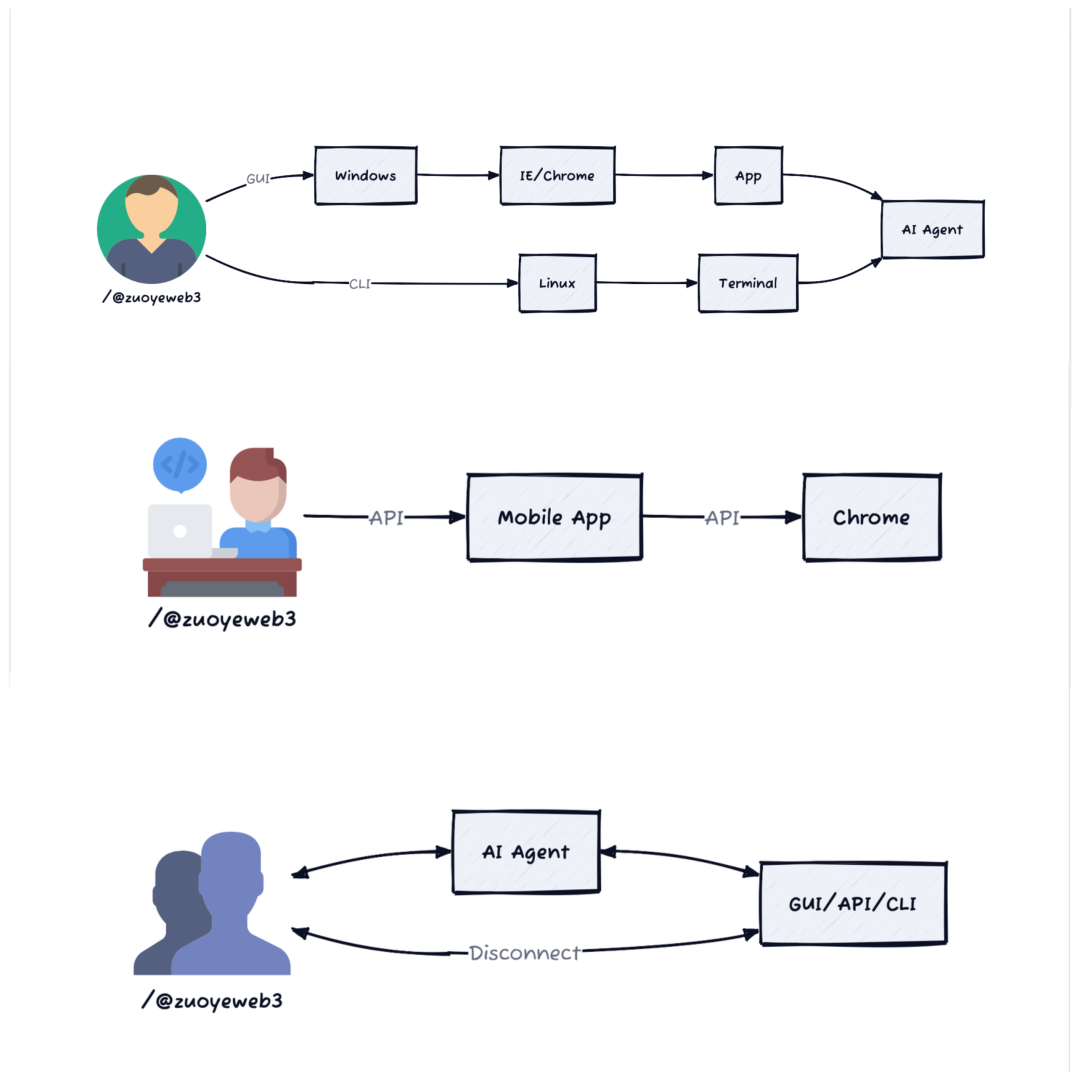

- Human-computer interaction: Web 1.0: CLI, TCP/IP, Netscape Navigator -- Web 2.0: GUI, REST API, search engines (Google), super apps -- Web 3.0: AI Agent + dapp?

To explain briefly: in the history of human-computer interaction, it was the combination of the Web 1.0 GUI and the browser, represented by Windows + IE, that really let the public use computers without barriers, while the API became the data abstraction and transmission standard behind the Internet. In the Web 2.0 era, the browser entered the age of Chrome, and the shift to mobile changed people's Internet habits. Apps from super platforms such as WeChat and Meta now cover every aspect of people's lives.

Third, the concept of intent in the Crypto field is the forerunner of the AI Agent boom within the circle. Note, however, that this holds only within Crypto. From the Turing-incomplete Bitcoin script to Ethereum smart contracts, the Agent concept has been present all along. The developments that followed, from cross-chain bridges to chain abstraction and from EOA to AA wallets, are all natural extensions of this kind of thinking, so it is not surprising that once AI Agents "invade" Crypto, they lead to the DeFi scenario.

This is where the concept of the AI Agent gets confusing. In the context of Crypto, what we actually want is an agent that "automatically manages finances and automatically buys new Memes." According to OpenAI's definition, however, such a dangerous scenario would require L4/L5 to be truly realized. Meanwhile, the public is playing with features such as automatic code generation or one-click AI summaries and ghostwriting. The two sides are not communicating on the same level.

Now that we understand what we really want, let's focus on the organizational logic of the AI Agent; the technical details stay hidden behind it. After all, the point of the agent concept is to remove the obstacles to mass adoption of a technology, just as the browser turned stone into gold for the PC industry. So our focus will be on two points: the AI Agent from the perspective of human-computer interaction, and the differences and connections between AI Agent and LLM. These lead to the third part: what the combination of Crypto and AI Agent will leave behind in the end.

let AI_Agent = LLM+API;

Before chat-based human-computer interaction such as ChatGPT, humans interacted with computers mainly through the GUI (graphical user interface) and the CLI (command-line interface). GUI thinking went on to produce browsers, apps and other concrete forms, while the combination of CLI and shell has rarely changed.

But this is only human-computer interaction on the "front end". As the Internet developed, the growth in the amount and variety of data led to more "back end" interaction between data and data, and between apps and apps. The two depend on each other: even a simple act of web browsing requires both to work together.

If the interaction between people and browsers or apps is the user entrance, then the links and jumps between APIs are what keep the Internet actually running. This, too, is part of the Agent idea: ordinary users can achieve their goals without understanding terms such as command line or API.

The same is true for LLM. Now users can go a step further and do not even need to search. The whole process can be described in the following steps:

- The user opens a chat window;

- Users use natural language, i.e. text or voice, to describe their needs;

- LLM parses it into process-based operational steps;

- The LLM returns its results to the user.

In this process, Google faces the greatest challenge: users no longer need to open a search engine, only one of the various GPT-like dialogue windows, and the traffic entrance is quietly changing. This is why some people think this round of LLMs will upend the search engine.

So what role does AI Agent play in this?

In a nutshell, AI Agent is a specialization of LLM.

The current LLM is not AGI; that is, it is not the ideal L5 organizer in OpenAI's scheme, and its capabilities are quite limited. For example, it hallucinates easily when it takes in too much user input. One important reason is the training mechanism: if you repeatedly tell GPT that 1+1=3, there is some probability that, when asked 1+1+1=? in the next interaction, it will answer 4.

Because GPT's feedback in this case comes entirely from the user, a model that is not connected to the Internet may well have its behavior changed by your input, leaving you with a broken GPT that only knows 1+1=3. If the model is allowed to connect to the Internet, however, its feedback becomes more diverse; after all, the vast majority of people on the Internet believe that 1+1=2.

To make it more difficult, if we must use LLM locally, how can we avoid such problems?

A simple and crude method is to use two LLMs at the same time, and stipulate that each time the answer is given, the two LLMs must verify each other to reduce the probability of error. If this doesn't work, there are other ways, such as having two users handle one process at a time. One is responsible for asking questions, and the other is responsible for fine-tuning the questions, trying to make the language more standardized and rational.
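The "two LLMs verify each other" idea can be sketched as follows. The two model functions are hypothetical stubs with hard-coded answers, used only to show the agreement check; a real setup would call two separate local models.

```python
from typing import Callable, Optional

Model = Callable[[str], str]


def model_a(question: str) -> str:
    """Hypothetical local model A (stub): only knows one fact."""
    return "2" if question.strip() == "1+1=?" else "unknown"


def model_b(question: str) -> str:
    """Hypothetical local model B (stub): knows two facts."""
    answers = {"1+1=?": "2", "1+1+1=?": "3"}
    return answers.get(question.strip(), "unknown")


def cross_checked_answer(
    question: str, first: Model = model_a, second: Model = model_b
) -> Optional[str]:
    """Return an answer only when both models agree; otherwise return None."""
    a = first(question)
    b = second(question)
    return a if a == b else None
```

Agreement between two models lowers the chance of an isolated error but does not prove correctness: two models trained on the same bad input can agree on the same wrong answer.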

Of course, being connected to the Internet does not avoid every problem. If the LLM retrieves its answers from unreliable sources, the result may be even worse. Avoiding such material, however, shrinks the amount of available data. In that case, the existing data can be split and reorganized, and new data can even be generated from the old, to make answers more reliable. In plain language, this is the idea behind RAG (Retrieval-Augmented Generation).
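A heavily simplified sketch of the retrieval half of RAG is shown below. It assumes a tiny in-memory document list and uses keyword overlap in place of the embedding search a real system would typically use; all names are illustrative.

```python
import re
from typing import List, Set

# A tiny trusted corpus (illustrative content only).
DOCUMENTS: List[str] = [
    "In ordinary arithmetic, 1 + 1 = 2.",
    "Bitcoin script is intentionally not Turing complete.",
    "Ethereum smart contracts run on the EVM.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Rank documents by how many tokens they share with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, documents: List[str]) -> str:
    """Prepend the retrieved context so the model answers from trusted text."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

The prompt returned by `build_prompt` would then be passed to the LLM, so the answer is grounded in selected material rather than in whatever the model happens to recall.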

Humans and machines need to understand each other. If we let multiple LLMs understand and collaborate with one another, we are already touching on how AI Agents operate: an agent acting on a human's behalf calls on other resources, including large models and other agents.

From this, we can grasp the connection between LLM and AI Agent. An LLM is a collection of knowledge that humans communicate with through a dialogue window. In practice, however, we find that certain task flows can be condensed into specific small programs, bots and command sets, and we define these as Agents.

An AI Agent is still part of the LLM, but the two cannot be treated as the same thing. An AI Agent is invoked on top of an LLM, with added emphasis on coordinating external programs, LLMs and other agents. Hence the sense that AI Agent = LLM + API.

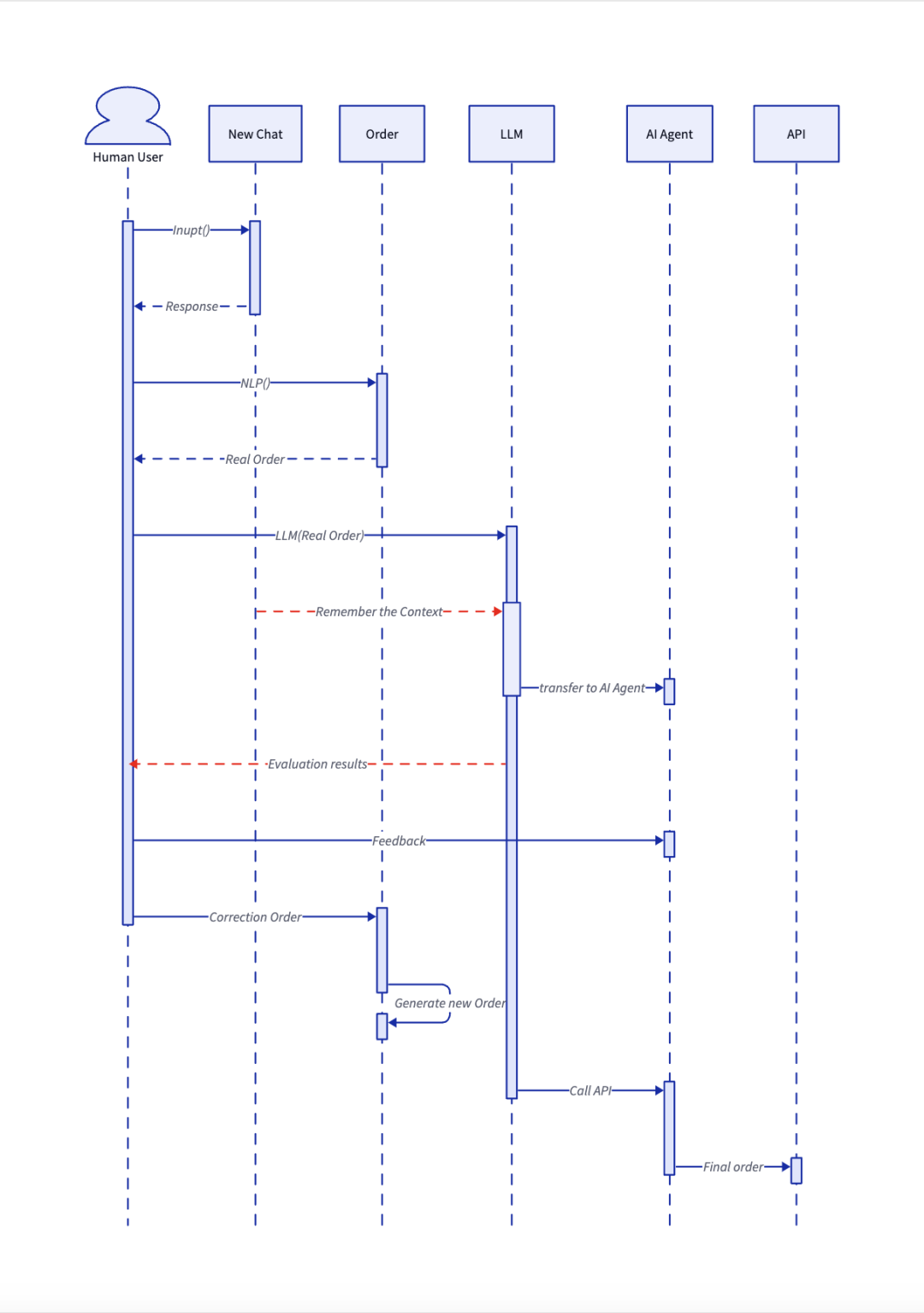

The AI Agent can now be added to the LLM workflow. Take calling X's API for data as an example:

- A human user opens a chat window;

- Users use natural language, i.e. text or voice, to describe their needs;

- The LLM interprets the request as a task that requires an API call and hands control of the conversation to the AI Agent;

- The AI Agent asks the user for their X account and API credentials, then communicates with X online according to the user's description;

- The AI Agent returns the final result to the user.
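The steps above can be sketched as a small program. Everything here is an assumption made for illustration: `fake_llm_route` stands in for the LLM's decision, and `fake_x_api` stands in for X's API without making any network call or mirroring X's real interface.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Credentials:
    account: str
    api_key: str


def fake_llm_route(request: str) -> str:
    """Hypothetical LLM stub: decide whether the request needs the X API."""
    return "x_api" if "latest posts" in request.lower() else "chat"


def fake_x_api(creds: Credentials, query: str) -> Dict[str, str]:
    """Hypothetical stand-in for X's API; no network call is made."""
    if not creds.api_key:
        raise PermissionError("missing API key")
    return {"account": creds.account, "result": f"(posts matching '{query}')"}


def handle(request: str, creds: Optional[Credentials] = None) -> str:
    # Step 3: the LLM classifies the request and may hand over to the agent.
    if fake_llm_route(request) != "x_api":
        return "LLM answer: (generated text)"
    # Step 4: the agent needs the user's account and credentials first.
    if creds is None:
        return "Agent: please provide your X account and API key."
    data = fake_x_api(creds, request)
    # Step 5: the agent returns the final result to the user.
    return f"Agent result for {data['account']}: {data['result']}"
```

For example, `handle("show my latest posts")` asks for credentials, and the same call with `Credentials("@alice", "secret")` returns the stubbed result.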

Do you remember the evolution of human-computer interaction? The browsers and APIs of Web 1.0 and Web 2.0 will still exist, but users can ignore them completely and interact only with AI Agents. The API call process can be carried out conversationally, and the API services can be of any type, including local data, network information and data from external apps, as long as the other party opens the interface and the user has permission to use it.

The complete AI Agent usage process is shown in the figure above. LLM can be regarded as a separate part from the AI Agent, or as two sub-links of a process. However, no matter how it is divided, it is serving the needs of users.

From the perspective of human-computer interaction, it is almost the user talking to themselves. You only need to express your thoughts and ideas, and the AI/LLM/AI Agent will guess at your needs again and again. Adding a feedback mechanism and requiring the LLM to remember the current context ensures that the AI Agent does not suddenly forget what it is doing.
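One simple way to "remember the current context" is to keep a bounded history of turns and resend it with every new message. The `Conversation` class below is a hypothetical sketch of that idea, not the mechanism of any particular product.

```python
from collections import deque
from typing import Deque, Tuple


class Conversation:
    """Keeps the most recent turns so each new prompt carries its context."""

    def __init__(self, max_turns: int = 4) -> None:
        # Older turns are dropped automatically once the limit is reached.
        self.history: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.history.append((role, text))

    def prompt(self, new_message: str) -> str:
        """Build the text sent to the model: remembered turns plus the new message."""
        lines = [f"{role}: {text}" for role, text in self.history]
        lines.append(f"user: {new_message}")
        return "\n".join(lines)
```

The `max_turns` limit is a design trade-off: a longer history keeps the agent on task, while a shorter one reduces the amount of input the model has to digest.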

In short, an AI Agent is a more personalized product, which is the essential difference between it and traditional scripts and automation tools. It is like a private butler who considers the real needs of the user. It must be pointed out, however, that this personalization is still the result of probabilistic guessing. An L3 AI Agent does not have human-level understanding and expression, so connecting it to external APIs is fraught with danger.

After AI framework monetization

The fact that AI frameworks can be monetized is an important reason why I remain interested in Crypto. In the traditional AI technology stack, frameworks are not very important, at least not as important as data and computing power, and it is difficult to monetize AI products through frameworks. After all, most AI algorithms and model frameworks are open source; what is truly closed is sensitive information such as data.

In essence, an AI framework or model is a container for, and combination of, a series of algorithms, like an iron pot for stewing a goose. The breed of goose and the control of the heat are what differentiate the taste, so the product being sold should be the goose. But now Web3 customers have arrived, and they want to buy the casket and return the pearl: buy the pot and discard the goose.

The reason is not complicated. Web3 AI products are basically copycats. They are all customized products based on existing AI frameworks, algorithms, and products. Even the technical principles behind different Crypto AI frameworks are not much different. Since they cannot be distinguished technically, it is necessary to make some changes in terms of names, application scenarios, etc. Therefore, some subtle adjustments to the AI framework itself have become the support for different tokens, thus creating a framework bubble for Crypto AI Agent.

Since there is no need to invest heavily in training data and algorithms, differentiating by name becomes particularly important. No matter how cheap DeepSeek V3 is, it still takes a great deal of effort, GPUs and electricity.

In a sense, this matches the recent pattern in Web3, where the token issuance platform is more valuable than the tokens themselves; Pump.Fun and Hyperliquid are examples. Agents should be the applications and assets, yet Agent issuance frameworks have become the most popular product.

In fact, this is also a way of value anchoring. Since there is no distinction between various types of Agents, the Agent framework is more stable and can produce a value siphon effect for asset issuance. This is the current 1.0 version of the combination of Crypto and AI Agent.

Version 2.0 is emerging, typified by the combination of DeFi and AI Agent. The concept of DeFAI is of course a market behavior driven by hype, but if we take the following into account, we will find that it is different:

- Morpho is challenging old lending products like Aave;

- Hyperliquid is replacing dYdX’s on-chain derivatives and even challenging Binance’s CEX listing effect;

- Stablecoins are becoming a payment tool in off-chain scenarios.

It is against this background of DeFi's evolution that AI is improving DeFi's basic logic. If the main logic of DeFi so far was to verify the feasibility of smart contracts, then AI Agents will change how DeFi products are made. You no longer need to understand DeFi in order to create DeFi products, which is an underlying empowerment that goes one step further than chain abstraction.

The era when everyone is a programmer is coming. Complex calculations can be outsourced to the LLM and API behind the AI Agent, and individuals only need to focus on their own ideas. Natural language can be efficiently converted into programming logic.

Conclusion

This article does not mention any Crypto AI Agent tokens or frameworks, because Cookie.Fun already does this well enough: first an AI Agent information aggregation and token discovery platform, then the AI Agent frameworks, and finally the Agent tokens that come and go. Continuing to list such information here has no value.

However, during this period of observation, the market still lacks a real discussion on what Crypto AI Agent points to. We cannot always discuss pointers; memory changes are the essence.

It is the ability to continuously convert various types of assets that is the charm of Crypto.