Author: Blockpunk @ Trustless Labs

Review: 0xmiddle

Source: Content Association - Investment Research

💡Editor’s recommendation:

In the world of blockchain, decentralized computing is a promised land that is hard to reach. Traditional smart contract platforms such as Ethereum are constrained by high computing costs and poor scalability, and a new generation of computing architectures is trying to break through these limits. AO and ICP are currently the two most representative paradigms. One is built around modular decoupling and unlimited scalability. The other emphasizes structured management and high security.

The author of this article, Blockpunk, is a researcher at Trustless Labs and an OG of the ICP ecosystem. He founded the ICP League incubator and has long been active in the technical and developer community. He has also followed AO closely and understands it deeply. This article is well worth reading if you are curious about the future of blockchain, or if you want to know what a truly verifiable and decentralized computing platform will look like in the AI era. It is also useful if you are looking for new public-chain narratives and investment opportunities. It analyzes the core mechanisms, consensus models and scalability of AO and ICP in detail. It also compares the two in depth on security, decentralization and future potential.

In this ever-changing crypto industry, who is the real "world computer"? The outcome of this competition may determine the future of Web3. Read this article to get a first-hand understanding of the latest landscape of decentralized computing!

Integrating AI has become a hot trend in today's crypto world, and countless AI Agents have begun to issue, hold and trade cryptocurrencies. This explosion of new applications creates demand for new infrastructure. Verifiable, decentralized AI computing infrastructure is especially important. However, neither smart contract platforms represented by ETH nor decentralized computing-power platforms represented by Akash and IO can deliver verifiability and decentralization at the same time.

In 2024, the team behind Arweave, the well-known decentralized storage protocol, announced the AO architecture. AO is a decentralized general-purpose computing network that can scale quickly and cheaply, so it can run many compute-intensive tasks such as AI Agent inference. AO's message-passing rules tie its computing resources together. Based on Arweave's holographic consensus, the order and content of every request are recorded immutably. Anyone can therefore obtain the correct state by recomputing it, which makes computation verifiable under an optimistic security guarantee.

AO's computing network does not reach consensus on the entire computation process, which keeps the network flexible and efficient. Processes, which can be thought of as "smart contracts", run under the Actor model and interact through messages without maintaining shared state. This sounds similar to the design of DFINITY's Internet Computer (ICP), which pursues similar goals through subnets that structure its computing resources. Developers often compare the two, and this article focuses on that comparison.

Consensus Computing and General Computing

Both ICP and AO aim to scale computation flexibly by decoupling consensus from the computation itself. This makes computation cheaper and able to handle more complex problems. Traditional smart contract networks such as Ethereum work differently. All nodes share a common state memory, and every node in the network must repeat any state-changing computation to reach consensus. This fully redundant design guarantees a unique consensus, but it has costs. Computation is very expensive, the network's computing power is hard to scale, and the network can only be used for high-value business. Even high-performance public chains such as Solana struggle to meet the intensive computing demands of AI.

As general-purpose computing networks, AO and ICP have no globally shared state memory, so they do not need consensus on the state-changing computation itself. They reach consensus only on the order in which transactions/requests execute, and then verify the computed results. Assume optimistically that node virtual machines execute correctly and deterministically. Then identical input requests in an identical order produce an identical final state. Smart contracts are called "containers" in ICP (officially "canisters") and "processes" in AO. Their state changes can therefore be computed in parallel on different nodes, and every node does not have to perform exactly the same tasks at the same time. This greatly lowers the cost of computation and raises the capacity to scale. These networks can therefore support more complex business logic and even run AI models in a decentralized way. Both AO and ICP claim "infinite scalability", and we compare the differences later.
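This paragraph claims that identical inputs in an identical order yield an identical state. The minimal Python sketch below illustrates that claim. It assumes a toy token-ledger process whose handler is a pure function of (state, message). The message format and the names `apply_message` and `replay` are hypothetical and not part of the AO or ICP APIs.

```python
from copy import deepcopy


def apply_message(state: dict, msg: dict) -> dict:
    """Hypothetical deterministic handler: output depends only on (state, msg)."""
    new_state = deepcopy(state)
    if msg["action"] == "mint":
        new_state[msg["to"]] = new_state.get(msg["to"], 0) + msg["amount"]
    elif msg["action"] == "transfer":
        # A transfer is silently rejected if the sender lacks funds.
        if new_state.get(msg["from"], 0) >= msg["amount"]:
            new_state[msg["from"]] -= msg["amount"]
            new_state[msg["to"]] = new_state.get(msg["to"], 0) + msg["amount"]
    return new_state


def replay(ordered_log: list[dict]) -> dict:
    """Any node replaying the same ordered log reaches the same state."""
    state: dict = {}
    for msg in ordered_log:
        state = apply_message(state, msg)
    return state


log = [
    {"action": "mint", "to": "alice", "amount": 100},
    {"action": "transfer", "from": "alice", "to": "bob", "amount": 30},
    {"action": "transfer", "from": "bob", "to": "carol", "amount": 20},
]

print(replay(log) == replay(log))      # True: same inputs, same order
print(replay(log))                     # {'alice': 70, 'bob': 10, 'carol': 20}
print(replay([log[0], log[2], log[1]]))  # {'alice': 70, 'bob': 30}: order matters
```

The reordered run diverges because Bob's transfer is processed before he has any balance. This is why the networks must agree on the order of requests, while the computation itself can be repeated anywhere.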

The network no longer maintains one large body of shared state. Each smart contract can therefore process transactions on its own, and contracts interact through messages, which is an asynchronous process. For this reason, decentralized general-purpose computing networks usually adopt the Actor programming model. As a result, contracts are less composable than on smart contract platforms such as ETH, which creates some difficulties for DeFi. These difficulties can still be addressed with specific business programming conventions. For example, the FusionFi Protocol on the AO network uses a unified "bill-settlement" model to standardize DeFi business logic and achieve interoperability. At this early stage of the AO ecosystem, such a protocol is quite forward-looking.
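The minimal asyncio sketch below shows why cross-contract calls become asynchronous under the Actor model. Each actor owns private state and a mailbox. Another actor cannot read that state directly: it must send a message and await a reply. The `Actor` class and the message shapes are illustrative assumptions, not the actual programming interface of AO or ICP.

```python
import asyncio


class Actor:
    """Toy actor: private state plus a mailbox processed one message at a time."""

    def __init__(self, name: str, balance: int = 0):
        self.name = name
        self._balance = balance  # private: other actors cannot read it directly
        self.mailbox: asyncio.Queue = asyncio.Queue()

    async def run(self) -> None:
        while True:
            msg = await self.mailbox.get()
            if msg["type"] == "stop":
                break
            if msg["type"] == "deposit":
                self._balance += msg["amount"]
            elif msg["type"] == "get_balance":
                # Reply by completing the caller's future, not by sharing memory.
                msg["reply_to"].set_result(self._balance)


async def ask_balance(target: Actor) -> int:
    """Request/response over messages: the caller must await a later reply."""
    reply = asyncio.get_running_loop().create_future()
    await target.mailbox.put({"type": "get_balance", "reply_to": reply})
    return await reply


async def main() -> int:
    vault = Actor("vault", balance=10)
    task = asyncio.create_task(vault.run())
    await vault.mailbox.put({"type": "deposit", "amount": 5})
    balance = await ask_balance(vault)  # handled after the deposit (FIFO mailbox)
    await vault.mailbox.put({"type": "stop"})
    await task
    return balance


if __name__ == "__main__":
    print(asyncio.run(main()))  # 15
```

The reply arrives later, so other messages may change the vault's balance between the query and any action the caller takes on it. That gap makes cross-contract DeFi composition harder than on a shared-state chain, where a read and a write can happen atomically in one transaction.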

How AO is implemented

AO is built on the Arweave permanent storage network and runs on a new network of nodes. These nodes fall into three groups: messenger units (MU), compute units (CU) and scheduler units (SU).

Smart contracts in the AO network are called "processes". A process is a piece of executable code uploaded to Arweave for permanent storage.

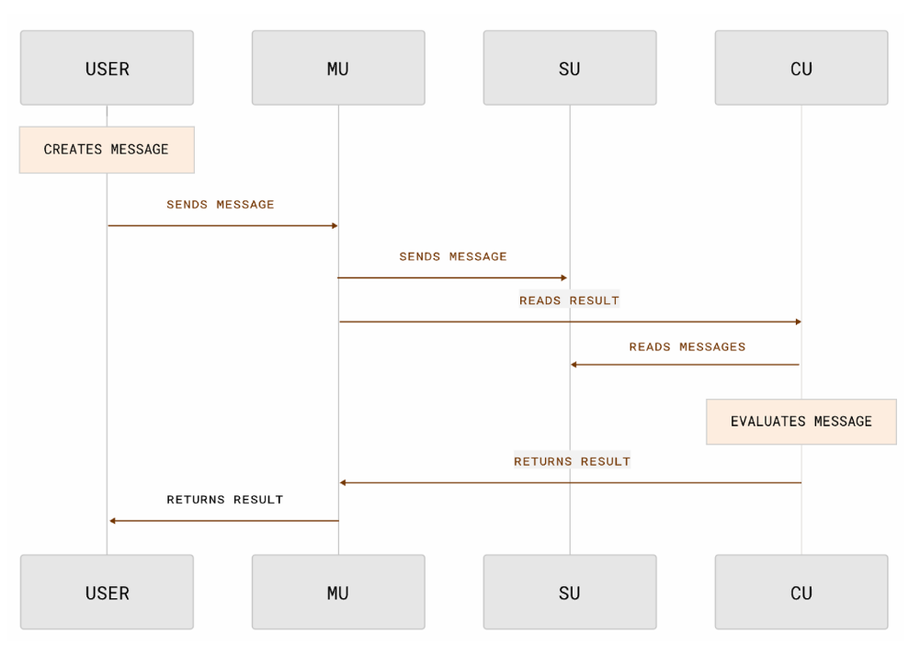

To interact with a process, a user signs and sends a request in AO's standardized message format. An MU receives the message, verifies the signature and forwards the message to an SU. The SU continuously receives requests, assigns each message a unique sequence number, and uploads the numbered messages to the Arweave network, which reaches consensus on the transaction order. Once the order is settled, the task is assigned to a CU. The CU performs the actual computation, updates the state and returns the result to the MU. The MU then either forwards the result to the user or passes it to an SU as a request to the next process.
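The message path above can be sketched as a simple in-memory pipeline: the MU verifies, the SU numbers and persists, and the CU computes. Several simplifications are assumptions made for illustration. Signature checking is reduced to an HMAC, and the Arweave upload is a Python list. All class and method names are hypothetical stand-ins, not AO's real node software.

```python
import hashlib
import hmac
from dataclasses import dataclass, field


@dataclass
class Message:
    process_id: str
    sender: str
    body: dict
    signature: bytes


def sign(key: bytes, body: dict) -> bytes:
    # Stand-in for a wallet signature: an HMAC over a canonical encoding of the body.
    payload = repr(sorted(body.items())).encode()
    return hmac.new(key, payload, hashlib.sha256).digest()


@dataclass
class MessengerUnit:
    keys: dict  # sender -> key, used to check signatures

    def receive(self, msg: Message) -> Message:
        expected = sign(self.keys[msg.sender], msg.body)
        if not hmac.compare_digest(expected, msg.signature):
            raise ValueError("invalid signature")
        return msg  # forwarded to an SU


@dataclass
class SchedulerUnit:
    arweave_log: list = field(default_factory=list)  # stand-in for Arweave storage

    def schedule(self, msg: Message) -> int:
        nonce = len(self.arweave_log)  # unique, strictly increasing sequence number
        self.arweave_log.append((nonce, msg))
        return nonce


class ComputeUnit:
    def compute(self, log: list) -> dict:
        # Recompute state from scratch, evaluating messages strictly in nonce order.
        state: dict = {}
        for _, msg in sorted(log, key=lambda entry: entry[0]):
            state[msg.process_id] = state.get(msg.process_id, 0) + msg.body.get("increment", 0)
        return state


keys = {"alice": b"alice-secret"}
mu, su, cu = MessengerUnit(keys), SchedulerUnit(), ComputeUnit()
for n in (1, 2, 3):
    body = {"increment": n}
    su.schedule(mu.receive(Message("counter-process", "alice", body, sign(keys["alice"], body))))

print(cu.compute(su.arweave_log))  # {'counter-process': 6}
```

The CU only needs the ordered log to do its work. Any number of CUs can run `compute` on the same log independently, which is the decoupling the next paragraph describes.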

The SU can be seen as the bridge between AO and Arweave's consensus layer, while the CUs form a decentralized computing-power network. Consensus and computing resources in AO are therefore completely decoupled. As more and higher-performance nodes join the CU pool, AO as a whole gains more computing power. It can then support more processes and more complex workloads, and scale elastically on demand.

So how does AO keep computation results verifiable? It takes an economic approach. CU and SU nodes must stake a certain amount of AO tokens. CUs compete on factors such as computing performance and price, and earn income by providing computing power.

Every request is recorded in Arweave's consensus, so anyone can reconstruct a process's entire state history by replaying the requests one by one. If someone discovers a malicious attack or a computation error, they can raise a challenge on the AO network. The correct result is then obtained by bringing in more CU nodes to repeat the computation, and the faulty node's staked AO is confiscated. Arweave does not verify the state of processes running on AO; it only faithfully records transactions. Arweave performs no computation itself, and the challenge process takes place within the AO network. A process on AO can therefore be viewed as a "sovereign chain" with its own consensus, with Arweave as its DA (data availability) layer.
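The challenge flow can be sketched as follows. A challenger replays the ordered log, compares the result with each CU's report, and slashes the stake of any CU whose report disagrees. In this sketch the challenger's own deterministic recomputation stands in for the additional CUs the article describes. The stake amounts, the `challenge` function and the all-or-nothing slashing rule are illustrative assumptions. The article does not specify AO's actual dispute and slashing rules.

```python
from dataclasses import dataclass


def replay(ordered_log: list[int]) -> int:
    """Deterministic toy process: the state is the running sum of message values."""
    state = 0
    for value in ordered_log:
        state += value
    return state


@dataclass
class CU:
    name: str
    stake: int
    faulty: bool = False  # hypothetical flag to simulate a misbehaving node

    def report(self, ordered_log: list[int]) -> int:
        result = replay(ordered_log)
        return result + 1 if self.faulty else result  # a faulty CU reports a wrong state


def challenge(ordered_log: list[int], cus: list[CU]) -> dict[str, int]:
    """Recompute from the permanent log and slash every CU whose report is wrong."""
    correct = replay(ordered_log)  # anyone can do this from the public record
    slashed: dict[str, int] = {}
    for cu in cus:
        if cu.report(ordered_log) != correct:
            slashed[cu.name] = cu.stake
            cu.stake = 0
    return slashed


log = [5, 7, 11]
cus = [CU("cu-1", stake=100), CU("cu-2", stake=100, faulty=True)]
print(challenge(log, cus))       # {'cu-2': 100}
print([cu.stake for cu in cus])  # [100, 0]
```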

AO gives developers complete flexibility. Developers can freely choose nodes in the CU market, customize the virtual machine that runs their program, and even customize the consensus mechanism within a process.

How ICP is implemented

AO splits nodes into separate groups by resource type. ICP instead uses relatively uniform data-center nodes at the bottom layer and provides structured resources to multiple subnets. From bottom to top, the layers are: data centers, nodes, subnets and software containers.

The bottom layer of the ICP network is a set of decentralized data centers running the ICP client software. This software virtualizes a series of nodes with standardized computing resources according to hardware performance. NNS (Network Nervous System), ICP's core governance system, randomly groups these nodes into subnets. Within a subnet, nodes process computing tasks, reach consensus, and produce and propagate blocks. They reach consensus through an optimized interactive BFT protocol.

The ICP network runs multiple subnets at the same time. Each group of nodes runs only one subnet and maintains that subnet's internal consensus. Different subnets produce blocks in parallel at the same rate, and subnets interact through cross-subnet requests.

Within each subnet, node resources are abstracted into "containers", and services run inside them. A subnet has no large shared state. Each container maintains only its own state, up to a maximum capacity limited by the Wasm virtual machine. Container state is not recorded in the subnet's blocks.

Within a subnet, computing tasks run redundantly on every node, while different subnets run in parallel. When the network needs to grow, ICP's core governance system, NNS, dynamically adds and merges subnets to meet demand.
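A toy model helps show what "redundant within a subnet, parallel across subnets" means for capacity. Adding nodes to a subnet adds replicas, which improves fault tolerance but not throughput. Adding subnets adds throughput. The per-node capacity figure and the `Subnet` class are hypothetical and purely illustrative. They are not ICP performance numbers.

```python
from dataclasses import dataclass


@dataclass
class Subnet:
    nodes: int            # replicas; affects fault tolerance, not throughput
    node_capacity: float  # hypothetical work units per second for one node

    @property
    def capacity(self) -> float:
        # Every node executes every message, so the subnet is only as fast as one node.
        return self.node_capacity


def network_capacity(subnets: list[Subnet]) -> float:
    # Subnets run in parallel, so their capacities add up.
    return sum(s.capacity for s in subnets)


small = [Subnet(nodes=13, node_capacity=100.0)]
bigger_subnet = [Subnet(nodes=34, node_capacity=100.0)]
more_subnets = [Subnet(nodes=13, node_capacity=100.0) for _ in range(3)]

print(network_capacity(small))          # 100.0
print(network_capacity(bigger_subnet))  # 100.0: more replicas, same throughput
print(network_capacity(more_subnets))   # 300.0: throughput grows with subnet count
```

This is why ICP scales by creating subnets through NNS rather than by enlarging existing ones.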

AO vs ICP

Both AO and ICP are built around the Actor message-passing model, a typical framework for concurrent distributed computing networks. Both also use WebAssembly as their default execution virtual machine.

Unlike traditional blockchains, AO and ICP do not record process state on a chain. Under the Actor model, the output of the virtual machine must therefore be deterministic by default. The system then only needs consistent transaction requests to guarantee consistent state within a process. Many Actors can run in parallel, which leaves huge room for scaling. Computing costs are therefore low enough to run general-purpose workloads such as AI.

In overall design philosophy, however, AO and ICP sit on opposite sides.

Structured vs Modular

ICP's design is closer to a traditional network model. It abstracts data-center resources, from the bottom layer up, into fixed services that include hot storage, computation and transmission. AO uses a modular design that is more familiar to crypto developers. It completely separates resources such as transmission, consensus verification, computation and storage, and assigns each to a distinct node group.

As a result, ICP imposes very high hardware requirements on its nodes, because every node must meet the minimum requirements of system consensus.

Developers must accept a unified, standardized program-hosting service, and each service's resources are bounded by its container. For example, a container can currently use at most 4GB of memory. This rules out some applications, such as running larger AI models.

ICP also tries to meet diverse needs by creating specialized subnets, but this depends on the overall planning and development work of the DFINITY Foundation.

In AO, the CU layer works more like an open computing-power market. Developers choose the specification and number of nodes according to their needs and price preferences, so they can run almost any process on AO. This design is also friendlier to node operators. CUs and MUs can scale independently, with a high degree of decentralization.

AO is highly modular and supports custom virtual machines, transaction-ordering models, messaging models and payment methods. For example, developers who need a private computing environment can choose CUs running in a TEE without waiting for the AO team to build one. Modularity brings more flexibility and lowers the barrier to entry for some developers.
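Choosing CUs from an open market, including TEE-backed CUs for private computation, amounts to filtering offers by requirements and sorting by price. The sketch below assumes a hypothetical `CUOffer` schema (memory, TEE flag, hourly price) and a hypothetical `select_cus` helper. The article does not describe how AO's CU market actually lists or prices nodes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CUOffer:
    node_id: str
    memory_gb: int
    has_tee: bool
    price_per_hour: float


def select_cus(offers: list[CUOffer], count: int, min_memory_gb: int,
               require_tee: bool = False) -> list[CUOffer]:
    """Pick the `count` cheapest offers that meet the developer's requirements."""
    eligible = [
        o for o in offers
        if o.memory_gb >= min_memory_gb and (o.has_tee or not require_tee)
    ]
    if len(eligible) < count:
        raise ValueError(f"only {len(eligible)} eligible CUs, need {count}")
    return sorted(eligible, key=lambda o: o.price_per_hour)[:count]


market = [
    CUOffer("cu-a", memory_gb=16, has_tee=False, price_per_hour=0.10),
    CUOffer("cu-b", memory_gb=64, has_tee=True, price_per_hour=0.40),
    CUOffer("cu-c", memory_gb=128, has_tee=True, price_per_hour=0.35),
    CUOffer("cu-d", memory_gb=64, has_tee=False, price_per_hour=0.20),
]

# A private AI workload: at least 32 GB inside a TEE, two CUs for redundancy.
chosen = select_cus(market, count=2, min_memory_gb=32, require_tee=True)
print([o.node_id for o in chosen])  # ['cu-c', 'cu-b']
```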

Security

ICP runs on subnets. When a process is hosted on a subnet, every node in the subnet executes its computation, and state is verified through the improved BFT consensus among all subnet nodes. This creates some redundancy, but the process's security is exactly that of its subnet.

Suppose two processes in the same subnet call each other, with process B taking process A's output as its input. No additional security considerations arise. The security difference between two subnets matters only when a call crosses them. A subnet currently has between 13 and 34 nodes, and reaches finality in 2 seconds.
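The classical BFT bound gives a sense of what 13 to 34 nodes buys. A group of n replicas tolerates up to f = ⌊(n − 1)/3⌋ Byzantine nodes, since it requires n ≥ 3f + 1. Quorums of n − f votes then always overlap in at least one honest node. This is the generic textbook bound, assumed here for illustration. ICP's consensus protocol may define its exact thresholds differently.

```python
def max_byzantine_faults(n: int) -> int:
    """Largest f satisfying n >= 3f + 1 (classical BFT bound)."""
    if n < 1:
        raise ValueError("a subnet needs at least one node")
    return (n - 1) // 3


def quorum_size(n: int) -> int:
    """n - f votes: any two quorums then share n - 2f >= f + 1 nodes,
    so at least one honest node, while f silent nodes cannot block progress."""
    return n - max_byzantine_faults(n)


for n in (13, 34):
    print(n, max_byzantine_faults(n), quorum_size(n))
# 13 4 9
# 34 11 23
```

Under this bound, a 13-node subnet survives 4 malicious nodes and a 34-node subnet survives 11. A call that crosses from a larger subnet to a smaller one therefore moves to a weaker security guarantee.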

In AO, computation is delegated to CUs that developers choose from the market. For security, AO relies more on token economics. CU nodes must stake $AO, and their computation results are trusted by default. AO records all requests on Arweave through consensus, so anyone can read the public records and verify the current state by recomputing it step by step. If a problem arises, more CUs can be selected from the market to join the computation. They reach a more reliable consensus, and the faulty CU's stake is confiscated.

This completely unbinds consensus from computation, giving AO far greater scalability and flexibility than ICP. When no verification is needed, developers can even compute on their own local devices and simply upload commands to Arweave through an SU.

However, this complicates calls between processes, because different processes may run under different security guarantees. Suppose process B uses 9 CUs for redundant computation while process A runs on only one CU. If process B accepts a request from process A, it must consider whether A might pass along a wrong result. Security considerations therefore constrain how processes interact. This also lengthens the time to finality: waiting for Arweave's confirmation cycle may take up to half an hour. The solution is to set a minimum number of CUs and a minimum CU standard, and to require longer confirmation times for higher-value transactions.
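The proposed mitigation can be expressed as an acceptance policy on the receiving process: a minimum number of CUs, plus longer confirmation waits for higher-value transactions. The value tiers, the thresholds and the `accept_message` function are hypothetical. Only the roughly 30-minute upper bound on Arweave confirmation comes from the text above.

```python
from dataclasses import dataclass


@dataclass
class IncomingMessage:
    sender_process: str
    sender_cu_count: int  # how many CUs redundantly computed the sender's result
    value: float          # economic value carried by the message
    age_seconds: float    # time since the message was recorded on Arweave


# Hypothetical tiers: (max value, minimum CUs behind the sender, minimum wait in seconds).
POLICY = [
    (100.0, 1, 0),            # small payments: accept immediately, even from one CU
    (10_000.0, 3, 120),       # medium value: 3 CUs and a 2-minute wait
    (float("inf"), 9, 1800),  # large value: 9 CUs and a full ~30-minute confirmation
]


def accept_message(msg: IncomingMessage) -> bool:
    for max_value, min_cus, min_wait in POLICY:
        if msg.value <= max_value:
            return msg.sender_cu_count >= min_cus and msg.age_seconds >= min_wait
    return False  # reached only for values that match no tier (e.g. NaN)


# Process A runs on a single CU, as in the example above.
small_from_a = IncomingMessage("process-A", sender_cu_count=1, value=50.0, age_seconds=5)
big_from_a = IncomingMessage("process-A", sender_cu_count=1, value=50_000.0, age_seconds=2000)
print(accept_message(small_from_a))  # True: low value, one CU is enough
print(accept_message(big_from_a))    # False: too few CUs behind the result
```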

However, AO has an advantage that ICP lacks: permanent storage containing the entire transaction history, so anyone can replay the state at any time. AO lacks the traditional block-and-chain model, but this design fits the crypto ideal that everyone can verify. In ICP, subnet nodes only compute and reach consensus on results. They do not store every transaction request, so historical information cannot be verified. In other words, ICP has no unified DA layer. If a container's owner acts maliciously and then deletes the container, the misconduct becomes untraceable. ICP developers have independently built a series of ledger containers that record call histories, but crypto developers still find this hard to accept.

ICP's degree of decentralization has long been criticized. A governance system called NNS decides system-level matters such as node registration and subnet creation and merging. ICP holders participate in NNS by staking. At the same time, every computation is replicated on many nodes, so node hardware requirements are very high, which creates an extremely high barrier to participation. New ICP functions and features therefore depend on launching new subnets. Each launch must pass NNS governance and, in practice, be driven by the DFINITY Foundation, which holds a large share of the voting power.

AO's fully decoupled approach returns more power to developers. An independent process can be regarded as its own subnet, a sovereign L2, for which developers only pay fees. The modular design also makes it easier for developers to introduce new features. Node providers also face lower participation costs than on ICP.

Conclusion

The ideal of a world computer is grand, but there is no optimal solution. ICP offers stronger security and fast finality. However, the system is more complex and more constrained, and some of its design choices struggle to win over crypto developers. AO's highly decoupled design makes scaling easier and offers more flexibility, which developers will welcome, but it also brings security complexity.

Consider the longer-term evolution of the field. In the ever-changing crypto world, it is hard for any paradigm to stay dominant for long; even ETH is being chased by Solana. Only designs that are more decoupled and modular, with easily replaceable components, can evolve quickly in the face of challenges, adapt to the environment and survive. As a latecomer, AO will become a strong competitor in decentralized general-purpose computing, especially in AI.